Supported Models in Refact.ai

Cloud Version

With Refact.ai, access state-of-the-art models in your VS Code or JetBrains plugin and select the optimal LLM for each task.

AI Agent models

- GPT-4.1 (default)

- Claude 3.7 Sonnet

- Claude 3.5 Sonnet

- GPT-4o

- o3-mini

Chat models

- GPT-4.1 (default)

- Claude 3.7 Sonnet

- Claude 3.5 Sonnet

- GPT-4o

- GPT-4o-mini

- o3-mini

For select models, click the 💡Think button to enable advanced reasoning, which helps the AI solve complex tasks. This feature is available only on the Refact.ai Pro plan.

Code completion models

- Qwen2.5-Coder-1.5B

Configure Providers (BYOK)

Refact.ai gives you the flexibility to connect your own API key and use external LLMs like Gemini, Grok, OpenAI, DeepSeek, and others. For a step-by-step guide, see the Configure Providers (BYOK) documentation.

Self-Hosted Version

In Refact.ai Self-hosted, you can choose among 20+ models. The full, up-to-date lineup is in the Known Models file on GitHub.

Completion models

| Model Name | Fine-tuning support |
|---|---|
| Refact/1.6B | ✓ |
| Refact/1.6B/vllm | ✓ |
| starcoder/1b/base | ✓ |
| starcoder/1b/vllm | |
| starcoder/3b/base | ✓ |
| starcoder/3b/vllm | |
| starcoder/7b/base | ✓ |
| starcoder/7b/vllm | |
| starcoder/15b/base | |
| starcoder/15b/plus | |
| starcoder2/3b/base | ✓ |
| starcoder2/3b/vllm | ✓ |
| starcoder2/7b/base | ✓ |
| starcoder2/7b/vllm | ✓ |
| starcoder2/15b/base | ✓ |
| deepseek-coder/1.3b/base | ✓ |
| deepseek-coder/1.3b/vllm | ✓ |
| deepseek-coder/5.7b/mqa-base | ✓ |
| deepseek-coder/5.7b/vllm | ✓ |
| codellama/7b | ✓ |
| stable/3b/code | |
| wizardcoder/15b | |
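
If you need to pick a completion model that can be fine-tuned, the table above can be encoded as plain data and filtered. The following is only an illustrative sketch: the dictionary is copied by hand from the table (an empty cell is treated as "no fine-tuning support"), and `FINETUNE_SUPPORT` and `finetunable` are hypothetical names, not part of Refact.ai or its API. The Known Models file on GitHub remains the authoritative source.

```python
# Fine-tuning support for self-hosted completion models, copied by hand
# from the table above. True = check mark in the table, False = empty cell.
# This mapping is an illustration, not something Refact.ai ships.
FINETUNE_SUPPORT = {
    "Refact/1.6B": True,
    "Refact/1.6B/vllm": True,
    "starcoder/1b/base": True,
    "starcoder/1b/vllm": False,
    "starcoder/3b/base": True,
    "starcoder/3b/vllm": False,
    "starcoder/7b/base": True,
    "starcoder/7b/vllm": False,
    "starcoder/15b/base": False,
    "starcoder/15b/plus": False,
    "starcoder2/3b/base": True,
    "starcoder2/3b/vllm": True,
    "starcoder2/7b/base": True,
    "starcoder2/7b/vllm": True,
    "starcoder2/15b/base": True,
    "deepseek-coder/1.3b/base": True,
    "deepseek-coder/1.3b/vllm": True,
    "deepseek-coder/5.7b/mqa-base": True,
    "deepseek-coder/5.7b/vllm": True,
    "codellama/7b": True,
    "stable/3b/code": False,
    "wizardcoder/15b": False,
}


def finetunable(family=None):
    """Return model names marked as fine-tunable, optionally for one family.

    The family is the first path segment of the model name
    (for example "starcoder2"), compared case-insensitively.
    """
    return [
        name
        for name, supported in FINETUNE_SUPPORT.items()
        if supported
        and (family is None or name.split("/")[0].lower() == family.lower())
    ]


if __name__ == "__main__":
    for model in finetunable("starcoder2"):
        print(model)
```

Calling `finetunable()` with no argument lists every fine-tunable completion model in the table; passing a family name narrows the list to that family only.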

Chat models

| Model Name |
|---|
| starchat/15b/beta |
| deepseek-coder/33b/instruct |
| deepseek-coder/6.7b/instruct |
| deepseek-coder/6.7b/instruct-finetune |
| deepseek-coder/6.7b/instruct-finetune/vllm |
| wizardlm/7b |
| wizardlm/13b |
| wizardlm/30b |
| llama2/7b |
| llama2/13b |
| magicoder/6.7b |
| mistral/7b/instruct-v0.1 |
| mixtral/8x7b/instruct-v0.1 |
| llama3/8b/instruct |
| llama3/8b/instruct/vllm |

Integrations

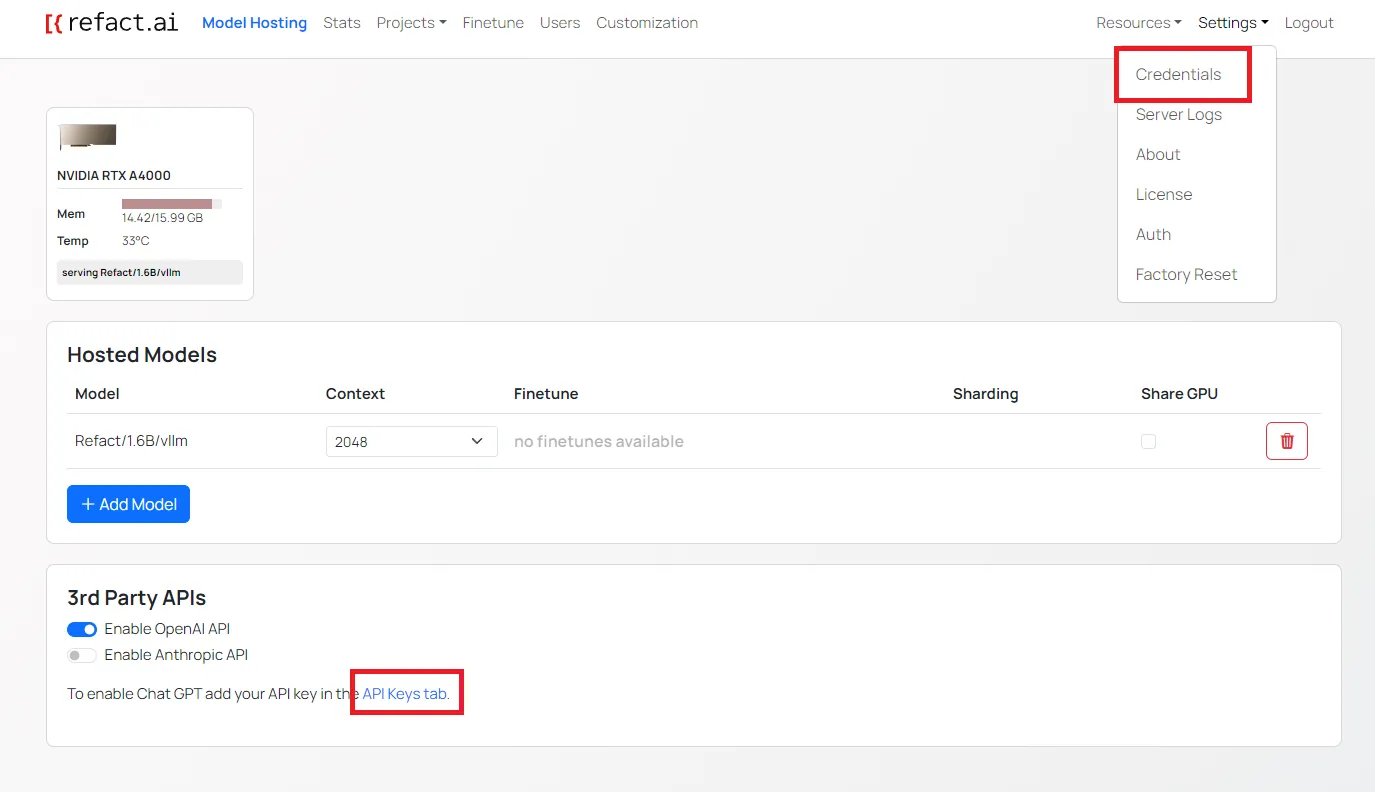

In self-hosted mode, you can also configure OpenAI and Anthropic API integrations.

- Go to the Model Hosting page → 3rd Party APIs section and toggle the switches for OpenAI and/or Anthropic.

- Click the API Keys tab to be redirected to the integrations page (or go via Settings → Credentials).

- Enter your OpenAI and/or Anthropic key.